Junk Science Has Stripped Thousands of New Yorkers of Their Freedom

The "Risk Assessment Instrument" is costing people their liberty for no good reason.

Tens of thousands of New Yorkers are languishing behind bars, having their freedom of movement heavily restricted, or even being forced into homelessness based on the results of a so-called “risk assessment tool” that relies on outdated junk science. The consequences of these assessments are dire. When formerly incarcerated people face unnecessary, onerous restrictions, their ability to reintegrate into society can be undermined. And when the tool fails in the opposite direction, too little oversight of other individuals can create safety concerns for families and children.

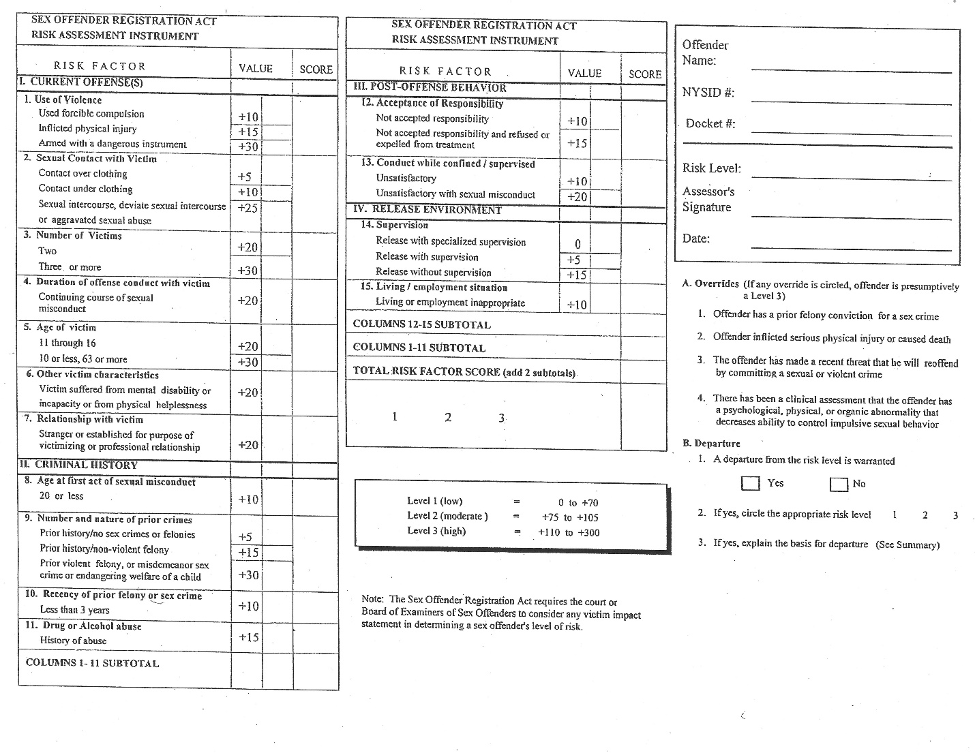

The Risk Assessment Instrument (RAI) is used by the New York State Board of Examiners of Sex Offenders to assess the risk that those convicted of sex offenses will sexually reoffend and to estimate the magnitude of harm caused by any reoffending. Based on various criteria, the RAI spits out a score that determines whether someone convicted of a sex offense is classified as level one (low risk), level two (medium risk), or level three (high risk) for reoffending.

The RAI was introduced back in 1996, and it hasn’t been updated since. The criteria it uses are based on research that dates back as far as 50 years. Meanwhile, the most significant research on the factors linked to recidivism was published within the last two decades.

This means that the RAI is both overinclusive of factors with no known correlation to risk and underinclusive of factors that researchers have consistently shown are correlated with elevated risk. Only six of the 15 factors used on the RAI (Factor 1: Use of Violence; Factor 8: Age at First Sex Crime; Factor 9: Number and Nature of Prior Crimes; Factor 11: Drug or Alcohol Abuse; Factor 13: Conduct While Confined or Under Supervision; and Factor 15: Living or Employment Situation) are generally correlated with risk.

The RAI is also fundamentally flawed in that it assigns arbitrary weights to these factors and assigns arbitrary ranges to the three different risk levels. For example, the scoring range for low risk is 0 to 70 points, while the range for the highest risk level is 110 to 300 points.
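To make those cutoffs concrete, here is a minimal sketch of how a total score could map to a risk level. It is an illustration, not the Board's actual procedure: the level one and level three bands come from the ranges quoted above, while the level two band is an assumption (everything that falls between them), and the names `risk_level` and `band_share` are hypothetical.

```python
# Illustrative sketch only. The level one and level three bands are the ranges
# quoted in the article; the level two band is an ASSUMPTION (whatever falls
# between the other two). Function names are hypothetical.
LOW_MAX = 70      # article: low risk spans 0 to 70 points
HIGH_MIN = 110    # article: highest risk spans 110 to 300 points
SCORE_MAX = 300   # top of the scale, per the article


def risk_level(score: int) -> int:
    """Map a total score to a presumptive level (1, 2, or 3)."""
    if not 0 <= score <= SCORE_MAX:
        raise ValueError(f"score must be between 0 and {SCORE_MAX}")
    if score <= LOW_MAX:
        return 1
    if score >= HIGH_MIN:
        return 3
    return 2  # assumed middle band


def band_share(low: int, high: int) -> float:
    """Fraction of the 0-to-300 scale that a band covers, by width."""
    return (high - low) / SCORE_MAX
```

Under these assumptions, `band_share(0, LOW_MAX)` comes to roughly 0.23 and `band_share(HIGH_MIN, SCORE_MAX)` to roughly 0.63, so the highest risk band takes up nearly two-thirds of the scale while the lowest takes up less than a quarter.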

The poorly designed, antiquated RAI has never been tested to determine how well it actually predicts risk, and the one time researchers examined it, they found its ability to predict recidivism was about as good as chance. Even more shocking, its ability to determine the magnitude of future harm was worse than chance. That means the RAI’s predictions on that measure proved accurate less than 50 percent of the time.

The instrument has also never been tested to ensure that it isn’t racially biased. In fact, in 2006, the NYCLU issued a report finding that Black people were overrepresented in the highest risk level.

These scores, which must be approved by a judge after a judicial hearing, have enormous consequences for tens of thousands of New Yorkers. If someone is labeled a level three offender, they are severely restricted in where they can live, work, and visit. People categorized as level three offenders can’t live within 1,000 feet of a school, for example.

In dense urban areas like New York City, this makes it almost impossible to find housing where people convicted of sex offenses can legally live. In hundreds of cases, people convicted of sex offenses have been held past their release date because they could not find a place to live that met all the restrictions. These New Yorkers have been kept for months or even years past their release date. They are often held in prisons, or under prison-like conditions, until they either find housing or their parole term ends.

The RAI doesn’t just lead the Board to unfairly take away people’s freedom. The restrictions placed on people labeled level three offenders, which can include lifetime surveillance through placement on the online registry as well as exclusion from public housing, make it much harder for them to successfully reintegrate into society.

There’s no proof these restrictions improve public safety. As New York Focus recently noted, studies in Missouri, Michigan, and Florida show these restrictions have had little or no effect on sex offense recidivism. The Justice Department also admits there is “no empirical support for the effectiveness” of these restrictions.

It’s bad enough that these policies, which are extremely harmful to people returning to their communities, have no positive impact. But it’s even worse that these restrictions are placed on people based on how they’re scored by a useless and out-of-date algorithm.

The risk assessment hearings in which the RAI is used have other flaws as well. Recently, the NYCLU, Restorative Action Alliance, and academic scholars filed a friend-of-the-court brief in the case People v. Cotto, arguing that holding a risk assessment hearing before an individual has any prospect of release into the community violates that person’s right to due process. This is because hearings in those circumstances lead to people being assessed based on evidence that is stale by the time they are actually released into the community.

The RAI is just one of many algorithmic tools that have sprung up across the criminal legal system. There are also risk assessment tools that purportedly help determine who should be let out on bail before trial, how long someone’s sentence should be, who should be granted parole, and which people are most likely to commit crimes.

Tools used for all of these purposes have been found to be racially biased. They overestimate, for example, how many Black people will be re-arrested when out on bail, and underestimate how many white people will do the same.

But this bias gets hidden behind a supposedly objective, mathematical, “colorblind” algorithm. Much like the RAI, these algorithms are often untested, and the factors they analyze are often kept secret on the grounds that the algorithms themselves are proprietary.

But the results are anything but objective. They ensure that more Black and Brown people get locked up for longer, with no public safety or societal benefit.

As society becomes more reliant on algorithms and various assessment instruments, policymakers need to ensure that these tools use real evidence, not junk science. They also must prevent these instruments from further exacerbating racial disparities that are already rampant in our criminal legal system.